- Andrew Leverett

- Product Owner for IT2, ION Treasury

- Andy Harris

- Senior Vice President Engineering, ION Treasury

- Tom Alford

- Deputy Editor, Treasury Management International

Practical Considerations for API Adoption

APIs are touted as a vital data link between a business and its partners. But what are the benefits, the practical demands of their adoption and how can they be most effectively managed now and in the future? Andy Harris, Senior Vice President Engineering, ION Treasury, and Andrew Leverett, Product Owner for IT2 at ION Treasury, consider the sharp end of API adoption.

For those treasurers who have yet to see or understand what all the fuss is about with APIs, they offer three main benefits, notes Harris. The most obvious is real-time system integration, with APIs providing the ability to automatically service requests for data or to maintain data, and to deliver success status or actionable error messages – almost immediately. As he explains: “It’s just generally faster and more reliable to get data into or out of a system via an API than by file transfer.”

Less obvious, but perhaps even more important than speed, is their inherent security. “APIs are more secure than files because files can be accessed from file systems or tampered with, whereas APIs are machine-to-machine, and a well-designed API supports secure communication including both authentication standards and authorisation standards,” adds Harris.

The third major benefit is around their business intelligence application. “APIs can provide the user with the ability to access their data in a very flexible way,” he comments. Indeed, they can be used, for example, to extract data into downstream systems on an ad hoc or regular schedule, or for data mining using common business tools such as Power BI or Excel. And that flexibility, he adds, extends to likely future use cases too.

For the purposes of this article, an API is taken as an integration point embedded within a system, such as a TMS, to facilitate data flows with other systems capable of calling APIs. For example, Power BI can pull data via an API within the user’s TMS to allow the user full access to their treasury data. In another example, an API could be used to establish a direct automated system-to-system data exchange with an external source such as a banking portal.

As an alternative to direct integration, middleware – in the form of an API management platform – can be used. There is often an extra cost to implementing one of these, warns Harris, but he adds that they can ease data transformation and error handling, and often support multiple routing and workflow modelling. The scale and cost of some of these platforms tend to see them adopted only at enterprise level.

One of the greatest aids to API adoption is the ability to access them in a standard way. Harris suggests that current best practice is based around the REST (Representational State Transfer) framework, and support for an easy-to-use syntax, for example OData. REST is commonly used in web and mobile applications and is generally deemed to be more flexible than SOAP (Simple Object Access Protocol).
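As an illustration of what such a syntax looks like in practice, the short Python sketch below builds a REST request URL using OData's standard query options (`$select`, `$filter` and `$top`). The base URL, the `CashBalances` entity and its field names are hypothetical, invented for this example rather than taken from any particular TMS.

```python
from urllib.parse import quote, urlencode

# Hypothetical base URL and entity/field names, for illustration only.
BASE_URL = "https://tms.example.com/api/v1"


def build_odata_url(entity, select=None, filter_expr=None, top=None):
    """Build a REST GET URL using OData system query options."""
    params = {}
    if select:
        params["$select"] = ",".join(select)   # which fields to return
    if filter_expr:
        params["$filter"] = filter_expr        # which rows to return
    if top is not None:
        params["$top"] = str(top)              # maximum number of rows
    # Leave '$', ',' and single quotes readable; spaces become %20.
    query = urlencode(params, quote_via=quote, safe="$,'")
    return f"{BASE_URL}/{entity}" + (f"?{query}" if query else "")


url = build_odata_url(
    "CashBalances",
    select=["Account", "Currency", "Balance"],
    filter_expr="Currency eq 'EUR' and Balance gt 1000000",
    top=50,
)
print(url)
```

The same URL could be pasted into a tool such as Power BI or Postman; only the filter text changes from one enquiry to the next, which is much of the appeal of a standard query syntax.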

First connections

APIs can be used for a huge range of purposes and data security should always be the primary consideration.

Once a tool such as Power BI is integrated with your TMS, it should not generally require further technical changes for additional data mining use cases, provided that the API has the required functional coverage. But if the goal is to build new integration points (perhaps, having integrated with one banking portal, the decision is made to integrate with another portal, a confirmation system, or the user’s own ERP), then it will be necessary to build a new integration point within the calling system to call the target API, with the facility to prove the caller’s identity to that API.

APIs should be well documented, states Harris. The documentation should provide call formats, details of possible response codes, and examples of how to call the API, and should ideally be documented to the OpenAPI specification.

Appropriate documentation is particularly important for those who wish to undertake most of the ongoing integration work internally because without it, companies may face the cost of engaging consultants, and prolonged effort when needing to create new integration points or modify existing ones.

While tools for testing APIs, such as Postman, can be helpful, Harris suggests that the first integration will almost always need some help from the API vendor, to understand how to connect to the APIs. The authentication aspect in particular, he notes, can be a technical challenge (see below). “Once you’ve cracked that, then calling the same API to make different requests should largely be a case of following the documentation.”

Before setting out to consume APIs, Harris cautions that it would also be prudent to make sure that you have budgeted for integration point licensing, and perhaps user licensing. While this may not be necessary, as users may be free to configure and publish additional APIs themselves, it “makes commercial sense” to at least check the position of each vendor to understand your future costs upfront based on your expected future use cases.

Cracking the code conundrum

While data mining with an API tends not to require much work once authentication is resolved, imports of data will always require some new code or configuration in the calling system, notes Harris. And while he accepts that “nothing is 100% configurable”, the flexibility of data formats that an API can support “can be quite a differentiator among vendors” and can sometimes mean configuration in the API rather than more significant code changes in the calling system.

Indeed, he continues: “If a new integration point is required, and the API vendor needs to write code in the product, then release a new version of its software to the client, to be blunt, that is pretty poor. It would mean that client spending more time and money to achieve what they want.”

When consuming an API, users should not need to understand how it works technically in order to integrate with it. If there is a need to send data from an ERP to a TMS via an API, however, some work will be required in that ERP, or middleware will be needed to route data between the two.

“It would be naïve to believe that there’s no integration costs for extensibility, but the degree by which it varies can be quite substantial,” states Harris. “Typically, there is no need to be overly technical to use APIs for system integration or data mining. But there is some intellectual involvement, depending on the systems with which you’re working. But if you need to have expert programming skills to consume an API then, in my opinion, it’s not a good API.”

That said, Harris acknowledges that “there’s always some kind of discussion to be had with IT” on integration points, as a minimum in respect to proof of identity and security. “If a TMS provides an API which supports 1,000 different types of calls that can be made, for any one of those calls the caller will need to prove its identity to the API. Once it has been set up for one call though, it should be the same for the other 999.”

Who are you?

Traditionally, logging into a system might require manual entry of a username and password, and possibly a second factor, such as a code sent to a mobile device. But for system-to-system integration, where for instance an ERP automatically connects to a TMS, when the ERP makes a call, the TMS’ API will require proof of identity.

Current best practice here is use of a digital certificate, says Harris. This is a highly secure way of proving identity, via a certificate that is securely held in a certificate store. Certificates are typically installed by system administrators so users wouldn’t have access.

A well-designed API should support the industry-standard OAuth 2.0 protocol to prove the identity of the system/user accessing the API. The calling system should be designed to automatically request an access token from a token issuer, using the certificate to prove its identity, to gain a short-lived token to access the desired API and the API should be configured to trust tokens issued by the token issuer and provide access rights to functionality and data appropriate for the role claims in the token.
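The token-handling side of this flow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a definitive implementation: the certificate-based request to the token issuer is deliberately left out and represented by a `request_token` function supplied by the caller, and the `TokenCache` class and its parameter names are hypothetical. The `access_token` and `expires_in` fields are the standard ones in an OAuth 2.0 token response.

```python
import time


class TokenCache:
    """Hold a short-lived access token and renew it shortly before expiry."""

    def __init__(self, request_token, clock=time.monotonic, margin_seconds=30):
        # request_token: hypothetical callable that performs the real,
        # certificate-authenticated request to the token issuer.
        self._request_token = request_token
        self._clock = clock
        self._margin = margin_seconds
        self._token = None
        self._expires_at = 0.0

    def bearer_header(self):
        """Return the Authorization header to attach to any API call."""
        now = self._clock()
        if self._token is None or now >= self._expires_at - self._margin:
            response = self._request_token()
            self._token = response["access_token"]
            self._expires_at = now + float(response["expires_in"])
        return {"Authorization": f"Bearer {self._token}"}


# Demonstration with a fake issuer and a fake clock (no network involved).
issued = []


def fake_token_issuer():
    issued.append(1)
    return {
        "access_token": f"token-{len(issued)}",
        "token_type": "Bearer",
        "expires_in": 300,  # token lifetime in seconds
    }


now = [0.0]
cache = TokenCache(fake_token_issuer, clock=lambda: now[0])

first = cache.bearer_header()   # no token held yet, so one is requested
second = cache.bearer_header()  # still valid, so the same token is reused
now[0] = 280.0                  # within 30 seconds of expiry
third = cache.bearer_header()   # a fresh token is requested

print(first, second, third, len(issued))
```

Because every call goes through the same header, the identity work is done once and reused, whichever of the API's functions is being called.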

The least appropriate means of API identification today – and therefore a point worth questioning – is the use of a hard-coded username and password in the request. “These may be typed in or stored as plain text in a file. Many APIs still use this model, but there can be security risks with this method of proving identity as the credentials may not be held securely,” warns Harris.

However, he continues, authentication must partner with authorisation. “An API should support the ‘principle of least privilege’ (PoLP) access, so that the user can only access the data they need to meet their requirements, and only in the way that is needed.” As an example, he says some system administrators may want an API called by a specific user to have access to a limited set of data, perhaps for a subset of companies, whereas the same API may be called by another user with broader or different access rights.
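The example Harris gives can be sketched as follows, assuming the API has already validated the caller's token and extracted its role claims. The `Caller` structure, the `balances.read` role name, the company codes and the balances are all hypothetical, chosen only to show the same call returning different data to different callers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Caller:
    name: str
    roles: frozenset      # role claims taken from an already validated token
    companies: frozenset  # companies this caller is entitled to see


# Hypothetical sample data.
BALANCES = [
    {"company": "UK01", "currency": "GBP", "balance": 1_200_000.0},
    {"company": "DE01", "currency": "EUR", "balance": 850_000.0},
    {"company": "US01", "currency": "USD", "balance": 2_400_000.0},
]


def read_balances(caller, records):
    """Return only the records the caller is authorised to see."""
    if "balances.read" not in caller.roles:
        raise PermissionError(f"{caller.name} may not read balances")
    return [r for r in records if r["company"] in caller.companies]


regional = Caller("erp-uk", frozenset({"balances.read"}), frozenset({"UK01"}))
group = Caller(
    "group-bi",
    frozenset({"balances.read"}),
    frozenset({"UK01", "DE01", "US01"}),
)
no_access = Caller("hr-system", frozenset(), frozenset({"UK01"}))

print(len(read_balances(regional, BALANCES)))  # one company only
print(len(read_balances(group, BALANCES)))     # all three companies
```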

Not all APIs are equal

Adopting APIs can begin to sound rather daunting, and the idea that treasury teams should be upskilling to meet new demands has been mooted many times in TMI articles. For Harris, treasurers should at least be conversant with the capabilities of APIs. “I doubt many treasurers are equipped today to build an integration between a bank portal and a TMS – that will probably require professional services from a vendor, someone from IT, or a technically skilled person. But for most, if they want to data mine using a well designed and documented API, then I think they can quickly learn how to do that using a tool such as Power BI.”

From a treasury perspective then, now is a good time to at least learn enough to understand what constitutes good API practice, and how this technology can be leveraged in the most pain-free way.

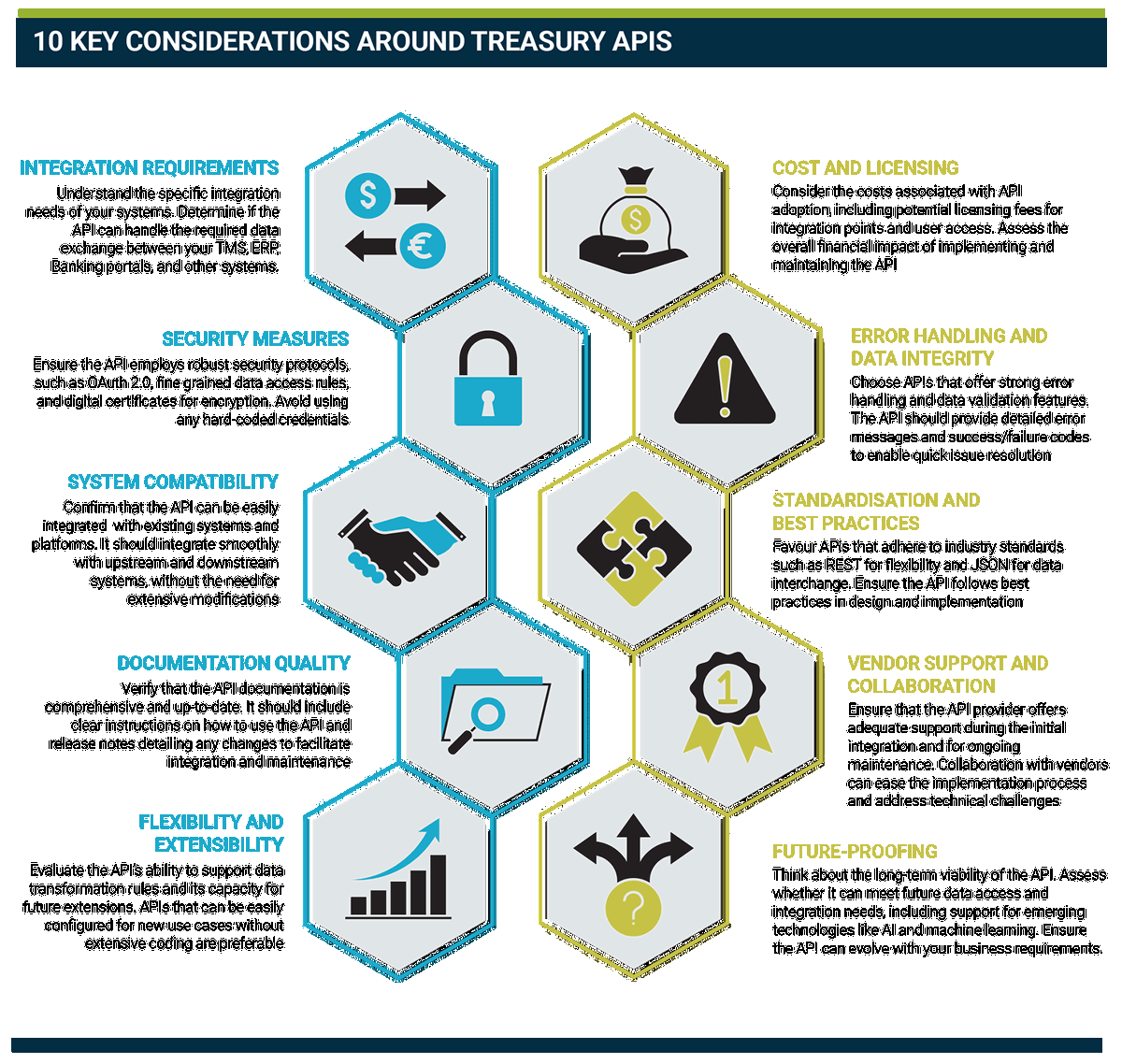

There are a few key areas where the good, the bad, and the ugly of the API world are differentiated. Harris breaks them down as follows:

- Coverage: Does the API support access and integration for all your data needs, present and future? “Many APIs operate at a database level, limiting access to data that is physically stored. However, some APIs additionally enable access to calculated data via the system business logic.” This, he explains, includes ‘on the fly’ calculations that, for example, go beyond a simple cash balance enquiry across several individual currencies, to an aggregation of cash in a single operating currency; this requires the API to access up-to-date exchange rates and convert to the operating currency.

- Data integrity and error handling: When using an API to integrate data from an upstream system, it is important that the API provides validation of the data, and returns standard success/failure codes and failure details, in a manner that the upstream system can interpret and act on. “If something doesn’t work as expected, it’s important to have enough information to be able to deal with it quickly,” says Harris. “If an account code is invalid or there’s not enough cash in the account, then the API should be able to report that, so that the calling system can generate an alert for the appropriate individuals to take the appropriate action.”

- Flexibility: Does the API support data transformation rules? This can be extremely important and save a lot of effort by providing a means of transforming the data from the format that an upstream system exports natively, to the format that the downstream system imports natively. “If a system is importing payment data from five different banking systems, then those five different systems will quite likely natively export payment data in five different formats,” notes Harris. “So rather than having five integration points or payments APIs, use one that has the ability to transform from one format to another. This may require a degree of customisation and programming if it’s not encoded into the TMS or part of an integration layer that can be modified specifically around that integration point.”

- Ease of use: Can the API be easily integrated with upstream and downstream systems? This is a question of standards, explains Harris. “If an API follows the OpenAPI specification for documentation, OAuth 2.0 for authorisation, and REST, then it should be quite easy to use. Generally, a treasurer will not need to be immersed in the details of these, but they are worth understanding from the perspective of best practice.”

- Extensibility: Is the API fixed and only improvable by programming, or can it be extended by a simpler act of configuration? “Most APIs will support data filtering and grouping, for example, but some systems also allow new APIs to be defined within the system and made accessible, subject to authorisation, by configuration,” notes Harris.
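To make the coverage and error-handling points concrete, the sketch below aggregates balances held in several currencies into one operating currency and, where a rate is missing, returns a structured failure that a calling system could act on. It is an illustration only: the account names, the exchange rates, the `MISSING_RATE` code and the shape of the response are all made up for the example.

```python
from decimal import Decimal


def aggregate_cash(balances, rates, operating_currency):
    """Sum balances in the operating currency, or report why that failed.

    rates maps a currency to units of operating currency per one unit of it.
    """
    errors = []
    total = Decimal("0")
    for item in balances:
        currency = item["currency"]
        if currency == operating_currency:
            rate = Decimal("1")
        elif currency in rates:
            rate = rates[currency]
        else:
            # Give the caller enough detail to raise an alert.
            errors.append({
                "code": "MISSING_RATE",
                "account": item["account"],
                "detail": f"No {currency}/{operating_currency} rate available",
            })
            continue
        total += item["amount"] * rate
    if errors:
        return {"status": "failed", "errors": errors}
    return {
        "status": "ok",
        "currency": operating_currency,
        "total": total.quantize(Decimal("0.01")),
    }


# Illustrative figures only, not real market rates.
balances = [
    {"account": "ACC-USD", "currency": "USD", "amount": Decimal("1000.00")},
    {"account": "ACC-GBP", "currency": "GBP", "amount": Decimal("2000.00")},
    {"account": "ACC-EUR", "currency": "EUR", "amount": Decimal("500.00")},
]
rates = {"GBP": Decimal("1.25"), "EUR": Decimal("1.10")}

ok_result = aggregate_cash(balances, rates, "USD")
failed_result = aggregate_cash(balances, {"GBP": Decimal("1.25")}, "USD")
print(ok_result)
print(failed_result)
```

An API that works only at database level would return the three balances and leave the conversion to the caller; one that exposes business logic would return the single aggregated figure, or a clear reason why it could not.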

Convenient truth

One general advantage of APIs is that they enable treasurers to perform easy follow-up actions on requests, says Leverett. “If a bank statement is missing from a file [in csv format, for example], currently most treasurers will need to make a phone call to the bank, to chase it up,” he explains. “With appropriate workflow built around an API, the treasurer has the option either to simply make another request for that statement, or message the bank requesting action.” This system-to-system dialogue can be automated.
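A minimal sketch of that kind of automated follow-up is shown below. It assumes a `fetch_statement` function, hypothetical here, that calls the bank's API and returns nothing when a statement is not yet available; the account identifiers and the number of attempts are likewise illustrative.

```python
from datetime import date


def collect_statements(accounts, statement_date, fetch_statement, max_attempts=3):
    """Request each statement, re-requesting any that come back missing."""
    received, missing = {}, []
    for account in accounts:
        for _ in range(max_attempts):
            statement = fetch_statement(account, statement_date)
            if statement is not None:
                received[account] = statement
                break
        else:
            # Still missing after every attempt: escalate, for example by
            # sending the bank a message requesting action.
            missing.append(account)
    return received, missing


# Fake bank API for demonstration: ACC-A answers at once, ACC-B on the
# second request, ACC-C never.
calls = {}


def fake_fetch(account, statement_date):
    calls[account] = calls.get(account, 0) + 1
    if account == "ACC-A":
        return {"account": account, "date": statement_date}
    if account == "ACC-B" and calls[account] >= 2:
        return {"account": account, "date": statement_date}
    return None


received, missing = collect_statements(
    ["ACC-A", "ACC-B", "ACC-C"], date(2024, 1, 31), fake_fetch
)
print(sorted(received), missing)
```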

And although APIs may have a greater upfront cost than file-based integration in terms of build and development, Leverett suggests that once the integration is up and running, its ongoing cost should be lower, with many more advantages in terms of efficiency and security. “APIs enable more logic to be brought into data integration, making it possible to build a full suite of actions to complete a process,” he says. “But there’s much more that can be achieved.”

Indeed, both he and Harris are currently working on a system, based entirely on REST APIs, that will offer full treasury functionality through a web application, as well as extensive direct integration using the same APIs. It is, says Leverett, “perhaps more than any treasurer would initiate as an integration with another system, but it can be made as simple or complex as required”.

In that process, an API offers specific features, and these can be built upon. “The first build is where you learn and feel the pain,” comments Leverett. “But after that, you’ll know all the questions to ask the vendor. And most vendors are now actively encouraging API connectivity because it’s a self-service tool that lowers their costs too: it suits them to avoid hosting SFTP [Secure File Transfer Protocol] servers and sending files, or having support staff taking calls when files have gone missing.”

As for downsides for treasurers, it’s telling that aside from the “justifiable” upfront cost of API deployment, Harris is unable to suggest any shortcomings. “Some may cite the security aspect as challenging to get right, but this comes with the territory; in fact, it is a positive that it demands close attention.”

POTENTIAL USE CASES FOR APIS

- Real-time market data feeds from Bloomberg and Reuters, with flexibility to dynamically define which tickers to request.

- Collection of latest intraday cash balances on demand, rather than relying on scheduling of intraday statement delivery.

- APIs for automation: using the published TMS APIs to automate tasks, for example automatically hedging an unhedged position using trading APIs.

- Using TMS APIs to make treasury functionality available via web, allowing, for example, self-service of treasury reporting either via connecting to BI tools such as Power BI, or building within the corporate intranet.

- Data exchange with ERP systems for receiving AP/AR information and pushing accounting journals for the treasury sub ledger.

- Data exchange with a wide variety of trading, confirmation, or reporting systems.

- Automated user provisioning or changing user access rights.

Know your needs

API use cases will develop in three main areas, says Harris. For treasurers, system integration (bringing data from a bank portal or ERP system into a TMS or vice versa), and ad hoc and systematic data mining to maintain system integrity will be of most interest. Perhaps of indirect note for treasury will be highly interactive activities, such as building API-driven websites, or where a payment approval facility is required to share with other entities.

In terms of technological advances, unsurprisingly, AI will have an increasing role to play in combination with APIs, as solutions move beyond the current trend for cash forecasting tools, and into areas such as matching, reconciliation, and payment anomaly detection. With AI needing a vast pool of data on which to train, the value of APIs in collecting that data is obvious.

The future, as predicted by Leverett, will increasingly see the withdrawal of the option of receiving a file; instead he believes that connecting to an API to obtain information will be the only practical way. “The reason integrations with machine learning hubs are by API is because file-based data flows are just too fragmented,” he comments. “Bank statement delivery by file operates to schedules only, but with an API, a request can be made anytime. It’s a much better service offering.”

With the best APIs “not just wrapping around old technologies, but creating new approaches”, Leverett says a treasury starting to consume APIs now “should expect to receive more benefits, and more features in the future”.

But in taking this path, it’s clear that while most vendors have an API offering, not all APIs are the same, says Harris. This is not necessarily a criticism, as different capabilities are required. But this is why it’s important that every treasurer thinks about their future and not just current needs.

“Whether using an existing API, or selecting a new TMS where API capability is a big part of the requirements, it’s important to think about all the questions raised above. Can I access all my data? How easy is it to use? Does it provide error handling? Does it follow the standards? Am I happy with the security? How future proof is it? These will be matters that take them through the next 10 or 20 years.”

By answering these questions, treasurers will be making sure they not only know what the benefits of APIs are but that they are also achieving those benefits. As both Harris and Leverett conclude: “Just make sure the technology works for you, not the other way around.”